AI Is Starting to Rule the Battlefield

There is no going back, and it could even help decide this war

Hi All,

Note: I started writing this midweek update early this morning. I often start on Monday, and it takes a few days to finish and refine it. However, as I wrote, it grew longer and longer, so I’m breaking it into parts. Here is part 1. Part 2 will come out later in the week, unless another important event or story preempts it, in which case it will come out next week. Apologies for the length!

For the midweek update, I thought I would touch on the most important technological development to come out of the Russo-Ukraine War. This development is not a case of a new system replacing an old one (tanks replacing horses, UAVs replacing fixed-wing aircraft, that sort of thing). It is about how some weapons are being controlled now, and how perhaps almost all weapons will be controlled in the future.

Yes, I’m talking about the enormous acceleration and acculturation we are seeing in the use of AI to control weapons of war, to the point that humans are increasingly being taken out of the loop. It shows, once again, that war can force profound and rapid change on issues that people previously thought they could stop, or at least delay, through extended debate.

War can potentially change anything: technology, culture, economics, and ethics. In the case of AI, it has changed, and is still changing, all of them.

AI and Weapons Before February 24, 2022

AI had been a point of discussion for decades before the Russian full-scale invasion. The debate was, to a large degree, one of control. Should the ability to decide what, when, and how to attack a target always be the preserve of humans, or should it be handed over to AI?

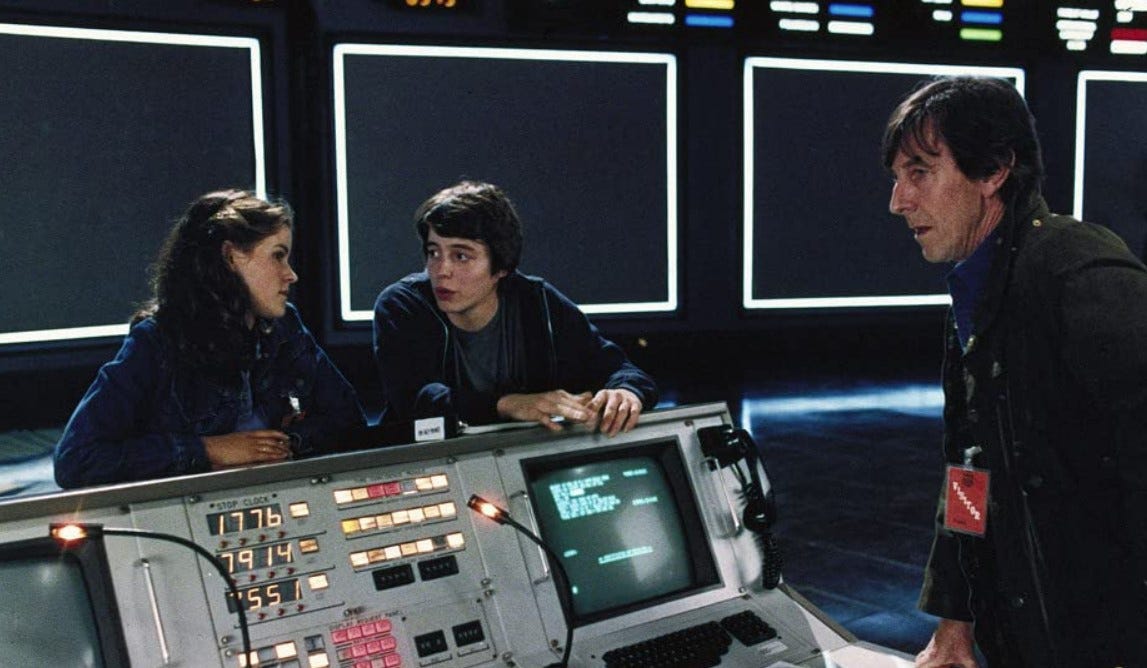

People of my generation probably remember the Matthew Broderick/Ally Sheedy film War Games, which came out in 1983 and was decidedly anti-AI. In the plot, the US, worried that human crews would not fire nuclear weapons if war came with the USSR, sets up an AI system called WOPR (War Operation Plan Response) that can decide on its own when to launch. Needless to say, the world almost blows up before our human heroes intervene to save the day.

In many ways, the pre-February 24, 2022 debate about AI and the control of weapons was the same one that played out in War Games. Is it ethical to allow AI to decide when to attack? Will it lead to more errors, war crimes, and so on?1 If you want a fairly comprehensive overview of the arguments against giving AI control over weapons, you could read this Bulletin of the Atomic Scientists article (free online) entitled "Giving an AI control of nuclear weapons: What could possibly go wrong?"2 It’s worth noting that it was published in early February 2022, just as the Russian army was gearing up to cross the border. Here is a brief excerpt. Basically, AI can’t be trusted.

> How autonomous nuclear weapons could go wrong. The huge problem with autonomous nuclear weapons, and really all autonomous weapons, is error. Machine learning-based artificial intelligences—the current AI vogue—rely on large amounts of data to perform a task. Google’s AlphaGo program beat the world’s greatest human go players, experts at the ancient Chinese game that’s even more complex than chess, by playing millions of games against itself to learn the game. For a constrained game like Go, that worked well. But in the real world, data may be biased or incomplete in all sorts of ways. For example, one hiring algorithm concluded being named Jared and playing high school lacrosse was the most reliable indicator of job performance, probably because it picked up on human biases in the data.

These kinds of arguments were widespread and were regularly made even inside the Pentagon and various MODs. It’s why the US DOD was always keen to stress that it would keep a human in all decision-making loops.3

It seems like such a quaint discussion now. War tends to blow away past worries with its inevitable appetite to destroy the other side. We’ve already seen this with land mines and cluster munitions, both of which were debated, and even called illegal, before the full-scale invasion. Now both are ubiquitous on the battlefield.
